A short story by Nikhil Malhotra, Head of Maker’s Lab at Tech Mahindra

INTRODUCTION

We are at the cusp of a new technological revolution powered by virtualization, SDN/NFV and 4G LTE. The action is being further amplified by 5G, which is set to redefine business across the globe. Thanks to this trend, the next wave of digitalization, driven by artificial intelligence and machine learning, is already simmering. This is where organizations are expected to face their next immediate challenge: understanding the natural language and intent of customers using deep neural network techniques. We are still a long way from making machines that can “understand” us and deliver a response that fits our expectations. However, efforts already underway are taking us in the right direction.

The new environment is making most traditional ways of doing things obsolete. At its peak, we expect many traditional operations to undergo a radical transformation through the application of speech recognition, natural language processing, artificial intelligence, analytics and machine learning. This is giving rise to new roles that facilitate machine learning (helping machines become intelligent) and analytics.

In this digital age, the enterprise is also experiencing a marked shift in the consumer purchase cycle. It is changing the way marketers manage media and publishing, a key aspect of promoting mass consumption. Given this trend, marketers are shifting their attention to ongoing customer experiences. This heralds a critical shift in consumer trust from published media to peer opinion, and it is peer opinion that now drives service uptake and loyalty.

This has a major impact on how we view digital, leading to an altogether new way of creating content and services and dramatically changing the traditional customer-supplier equation. It is increasingly driven by evolving technologies that offer new lifestyles and insights.

The era of customers reaching out to suppliers is long gone. Now it is the supplier that must seek out the customer, and do so through peer influence rather than conventional media.

Today, the word ‘convenience’ has assumed an all-important role, not just in discovering new services but also in delivering and managing them. Product life-cycles have become shorter, placing very high demands on traditional ways of creating and delivering products. More often than not, a product takes so long to release that it is obsolete by the time it reaches the market.

This is where convenience can manifest through data-driven personalization. In this context, data means patterns that a machine can understand, first to automate mundane processes and then to drive business efficiency.

FUTURE CONVENIENCE VIA AI

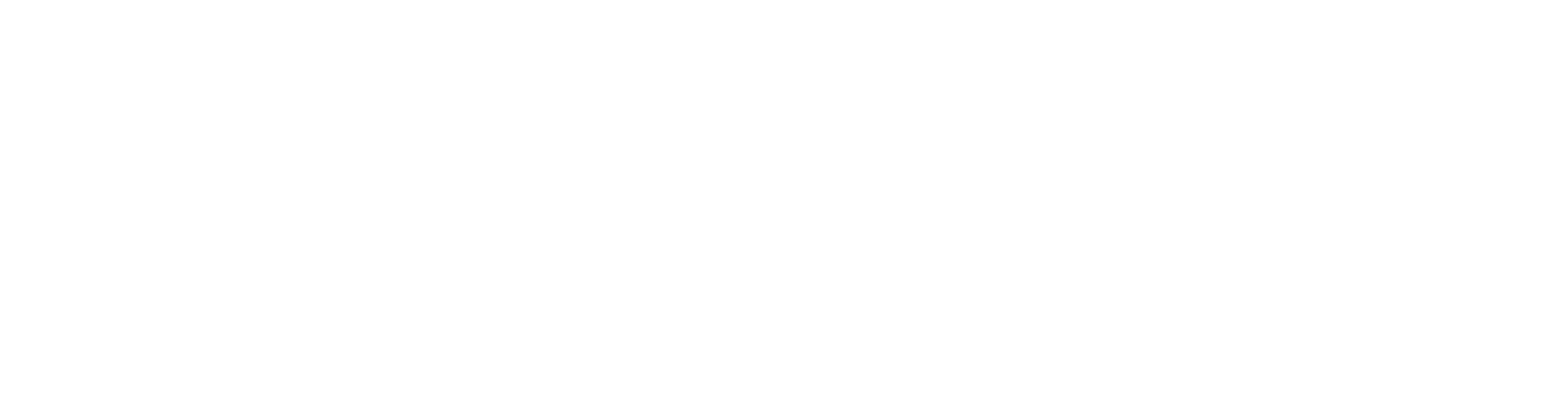

Digital information coupled with personalized perception, learning and even prescription is the future we are looking at. Today we are at “scale 2”, where organizations have grasped the story and are pushing towards automation. The next level, which we call “scale 3”, would see hyper-automation, followed by “scale 4”, where we see cognition.

Figure 1: The writer’s prediction, based on current trends and experience.

The range of AI applications that we are looking at from a commercialization standpoint includes the following:

- Fluid operations in call centers using natural language processing, machine learning and AI.

- Bots handling everyday operations, be it rail ticketing, getting content onto devices or getting directions to a restaurant.

- Robots in homes that monitor your electronic medical records (EMRs) and raise alarms when a person’s wellness is at risk.

- Machines using adversarial neural networks to get better at tasks such as coding, calculating costs and even project management.

As far as jobs are concerned, we are looking at a completely new class emerging, the “new-collar jobs”, and yes, this involves re-skilling and, to some extent, dismantling current job frameworks. The traditional worker model will be disrupted in the coming 5 years. One new role that will emerge is what I call a “Neuro Linguistic Programmer”: a person who knows how to talk to machines and make sense of the vast amount of information that comes out of them.

WHAT IS THE REALITY?

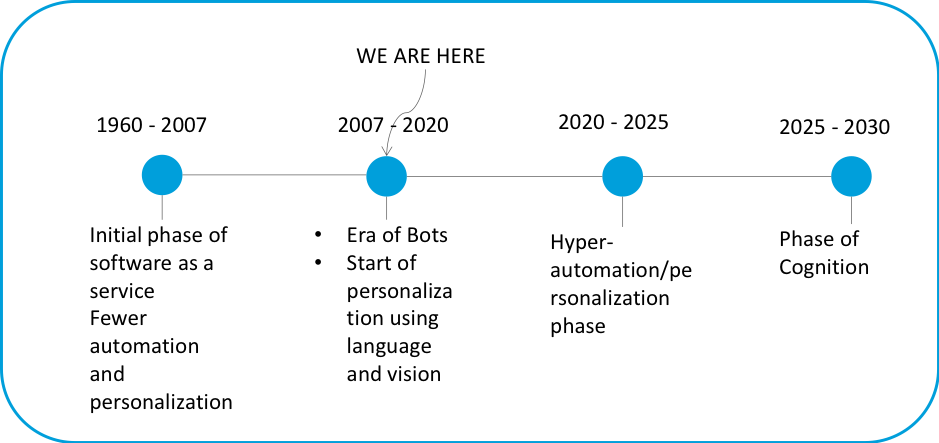

It is prudent to check the actual reality, which is likely to be far from the hype. The accompanying figure shows the trends in 2017, as reported by McKinsey & Company.

While there have been fantastic investments in AI from the big five technology companies, adoption has been very low. Most industry champions have been reluctant to apply AI and machine learning to customer-facing tasks, primarily because there is as yet no reliable mechanism to test the efficacy of the output. Some other trends visible in the market today are as follows:

- There is no unified framework or common ecosystem for AI. AI work has largely been concentrated among PhD students and scientists trying to improve algorithms by a few percentage points, and developers lack a common ecosystem in which to use AI algorithms. Developers today use different toolsets and languages (Caffe, TensorFlow, Python, Keras, etc.) to build machine learning models.

- Commercial deployments of AI in speech and image processing have come primarily from the big five software houses. Research on these techniques started some three decades ago, but their use in production environments has been limited.

- Industry suffers from a severe lack of AI innovation, particularly in communications and networking, which has prompted data and voice providers to fall back on older statistical automation techniques to achieve the desired results. This has led to rules-based systems rather than the pattern-matching systems being proposed here.

- Deploying AI models continues to be difficult, and integrating them with different workflows remains among the biggest challenges.

ACUMOS – NEED FOR HARMONIZING AI

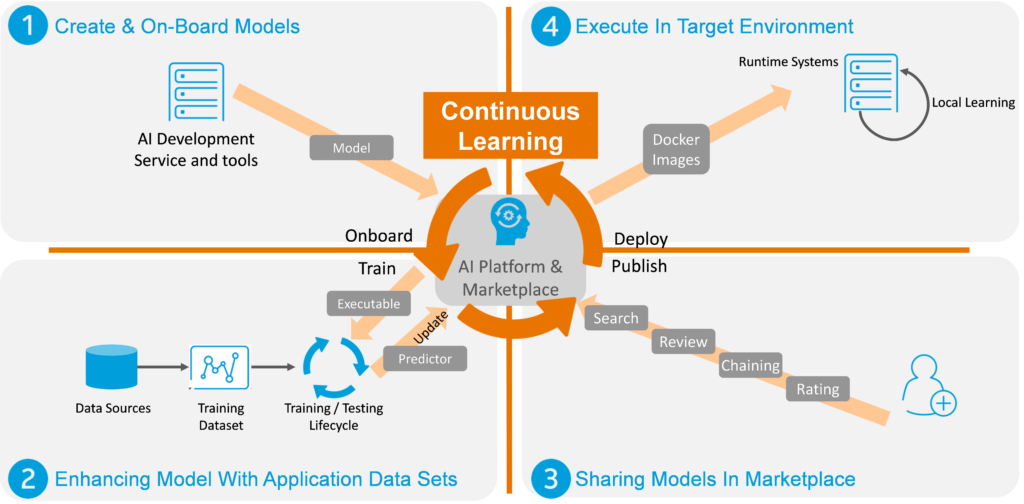

These challenges prompted the research wings of Tech Mahindra and AT&T to look at the problem differently. The idea that emerged was to build a system that would harmonize the dysfunctional world of AI. Imagine if developers across the world had a common platform on which to look at models, download them, test them, share updated versions back and also stitch different models together. This was the thought that triggered the creation of ACUMOS.

As the two teams developed this thought, a set of guiding principles emerged. There were many to start with, but the teams settled on four guiding principles, or mantras, to ensure that AI creates the desired impact once ACUMOS is in place. These principles are as follows:

- Acumos would provide a common open source framework for AI, in which freelance developers and developers from various organizations could see practical models being built, shared and used. The goal of the framework is to have models that can be used in a production environment to achieve business efficiency.

- It would be a distributed marketplace with a federated structure: a world open for anyone to look at, and at the same time one that could be kept closely knit within a certain set of developers.

- The framework would allow interoperable microservices to be created as deployable units. Each service would solve one specific use case and could easily be used by others. This has wider implications, because in today’s world different developers use different toolkits to generate models. Consider an example. One developer creates a model that categorizes images using a convolutional neural network built with TensorFlow. Another developer uses scikit-learn to create a model that recognizes sentiment in images. Acumos treats these two pieces as two interoperable services. With a simple drag and drop, an organization or a developer can combine the two models (one that finds objects and one that recognizes sentiment) into a more complex solution. An ad agency could then use this solution to gauge how effective an advertisement is with its audience.

- A framework that would expedite innovation.
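The drag-and-drop composition in the example above can be sketched in a few lines of Python. This is a minimal sketch, not the Acumos API: it assumes a hypothetical common contract in which every model service accepts a dictionary and returns an enriched dictionary. The two services (`object_service`, `sentiment_service`) and their input fields (`vehicle_score`, `smile_score`) are made-up stand-ins for the TensorFlow and scikit-learn models, not real trained models.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical shared contract: every service takes a dict and returns a dict.
Payload = Dict[str, Any]
ModelService = Callable[[Payload], Payload]


def object_service(payload: Payload) -> Payload:
    # Toy stand-in for an image-categorization CNN (e.g. built with TensorFlow).
    label = "car" if payload["vehicle_score"] > 0.5 else "other"
    return {**payload, "object": label}


def sentiment_service(payload: Payload) -> Payload:
    # Toy stand-in for a sentiment model built with another toolkit (e.g. scikit-learn).
    mood = "positive" if payload["smile_score"] >= 0.5 else "negative"
    return {**payload, "sentiment": mood}


@dataclass
class Chain:
    """Runs services in order, feeding each one's output into the next."""
    services: List[ModelService]

    def __call__(self, payload: Payload) -> Payload:
        for service in self.services:
            payload = service(payload)
        return payload


ad_efficacy = Chain([object_service, sentiment_service])
result = ad_efficacy({"vehicle_score": 0.9, "smile_score": 0.7})
print(result["object"], result["sentiment"])  # car positive
```

Because each stage depends only on the shared input/output contract, either model could be retrained or replaced with one built in a different toolkit without changing the chain itself.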

A pictorial representation of these tenets is as follows:

Figure 3: Acumos marketplace features

- Ability to create and onboard models

- Ability to download models and train them with your own data sets

- Ability to share models with a targeted group or the world

- Ability to chain complex models

- Ability to execute models in a target environment, for example packaged as a Docker image
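To make the last two features more concrete, here is a minimal sketch, using only Python's standard library, of wrapping a model as a self-contained HTTP microservice of the kind that could be packaged into a Docker image. It is an illustration under stated assumptions, not how Acumos actually packages models: the `/predict` endpoint, the JSON field names and the `sentiment_model` function are all hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict


def sentiment_model(features: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical stand-in for a trained model: a threshold on one input field.
    score = float(features.get("smile_score", 0.0))
    return {"sentiment": "positive" if score >= 0.5 else "negative"}


class ModelHandler(BaseHTTPRequestHandler):
    """Exposes sentiment_model at POST /predict, JSON in and JSON out."""

    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404, "unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        features = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(sentiment_model(features)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    # Binding to 0.0.0.0 makes the service reachable from outside a container.
    HTTPServer(("0.0.0.0", port), ModelHandler).serve_forever()
```

Calling `serve()` as a container's entry point would expose the model on port 8080, and a `POST /predict` with the body `{"smile_score": 0.8}` would return `{"sentiment": "positive"}`. Keeping the interface to plain JSON over HTTP is what lets such a unit be chained with services written in other toolkits.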

With the ACUMOS platform, we’re working to create an industry standard for making AI apps reusable and easily accessible to any developer. AI tools today can be difficult to use and are often designed for data scientists. The ACUMOS platform will be user-centric, with a focus on creating apps and microservices and on the ability to stitch models together to create complex services for a business.

THE NEXT FRONTIER

Another question that is often asked is whether the world is doomed and we are looking at another Skynet.

I don’t think we will come anywhere near something like this in the next 200 years. Weapons are a far more likely cause of a crisis for the human race than AI components. The reason is very simple: what we have achieved with statistical models and machine learning/deep learning has still not brought us to very advanced AI. Also, AGI (artificial general intelligence) still remains a pipe dream, and many factors would have to come together to make it a reality.

Today, machines are capable of doing certain tasks exceedingly well. Give a machine a pattern and data to crunch, and it will classify and predict outcomes. This is nowhere close to how the human brain works. We are far more cognitive and emotional beings, and much of our intelligence is housed in the outer layer of the brain called the ‘neocortex’. It is the neocortex that is involved in making cognitive decisions, be it our faith, our emotional responses or our response to society at large. In the future, what we build with machines will eventually get closer to this cognition. The so-called reptilian brain, the most primitive part of the brain, is not currently being modeled in AI.

The harmonization that ACUMOS brings could see a new breed of algorithms emerge, and here we are talking about algorithms other than the neural networks that are our mainstay today.

CONCLUSION

A change is on the horizon, and that change looks ominous. We are looking at a world where humans will work together with machines on some of the mundane tasks we perform today. However, we need to embed that change in our psyche and work towards more AI + IA (artificial intelligence plus intelligence augmentation), which would enable humans and machines to intelligently augment each other. For this, we need a common ecosystem and harmony amongst developers, researchers and hobbyists alike to create a standard for the world to work with.

ABOUT THE AUTHOR

“We are reminded of the limitless-ness of human curiosity when we see man and machine create marvels for the future together,” is the quote Nikhil Malhotra lives by.

Nikhil Malhotra, who has more than 17 years of experience in a variety of technology domains, is the head of Maker’s Lab, a unique Thin-q-bator space within Tech Mahindra.

In his present avatar, he heads Tech Mahindra’s R&D space, Maker’s Lab, which he created in 2014. The lab focuses on artificial intelligence, robotics and mixed reality. Nikhil’s area of personal research has been natural language processing, enabling machines to talk the way humans do. Nikhil has also designed an indigenous robot in his lab to serve as a personal assistant.

He is driven by a dream of creating smart machines that would wed human emotions with artificial intelligence to make lives better.

He is also a leading speaker on digital transformation, the practical use of AI and its future.

He holds a Master’s degree in computing with specialization in distributed computing from Royal Melbourne Institute of Technology, Melbourne.

Nikhil currently resides in Pune with his wife Shalini and son Angad.